KNOWLEDGE BASE

Python Development

I have a strong foundation in Python, with a solid understanding of core programming fundamentals and Object-Oriented Programming (OOP) concepts. My experience includes working with common modules and packages, managing dependencies with pip and uv, and performing advanced data processing with Pandas and DuckDB. I’m skilled in reading and writing structured and semi-structured data formats, including CSV, JSON, and XML. My projects often involve database interaction through the SQLAlchemy ORM and psycopg2, which connect Python applications to relational databases.
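As a small illustration of reading the three formats mentioned above, the following sketch parses the same records from CSV, JSON, and XML using only the Python standard library. It is not taken from a specific project: the sample payloads and the function names (`parse_csv`, `parse_json`, `parse_xml`) are hypothetical.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical sample payloads; in practice these would come from files or APIs.
CSV_TEXT = "id,name\n1,Ada\n2,Grace\n"
JSON_TEXT = '[{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]'
XML_TEXT = "<people><person id='1'>Ada</person><person id='2'>Grace</person></people>"


def parse_csv(text: str) -> list[dict]:
    """Parse CSV text into records, casting the id column to int."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"id": int(row["id"]), "name": row["name"]} for row in reader]


def parse_json(text: str) -> list[dict]:
    """Parse a JSON array of objects; types are already preserved by JSON."""
    return json.loads(text)


def parse_xml(text: str) -> list[dict]:
    """Parse <person id='..'>name</person> elements into records."""
    root = ET.fromstring(text)
    return [{"id": int(p.get("id")), "name": p.text} for p in root.findall("person")]


records_csv = parse_csv(CSV_TEXT)
records_json = parse_json(JSON_TEXT)
records_xml = parse_xml(XML_TEXT)
```

All three parsers return the same list of dictionaries, which makes it straightforward to hand the result to a later processing step regardless of the source format.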

I also have experience scraping both static sites with BeautifulSoup and dynamic, JavaScript-rendered sites with Playwright. In support of these skills, I hold the Associate Data Engineer and Certified Data Engineer certifications from DataCamp, which reflect my ability to build efficient, production-ready data workflows in Python.

SQL & Database Concepts

I have a solid understanding of relational database fundamentals, including writing and optimizing SQL queries for data retrieval and manipulation. I’m familiar with core concepts such as tables, relationships, indexes, and constraints, and I can work with stored procedures and views at a basic level. My skills include monitoring query performance with SQL Server Profiler to identify and troubleshoot inefficient queries, as well as performing backup and restore operations so that data can be recovered after a failure. This foundational knowledge allows me to maintain reliable database environments and support application data needs.
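To make the concepts of tables, relationships, constraints, and indexes concrete, here is a minimal sketch using Python's built-in `sqlite3` module. SQLite and its `EXPLAIN QUERY PLAN` statement are stand-ins chosen so the example runs anywhere; they are not the same tool as SQL Profiler. The schema, the index name, and the `plan` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Two related tables with primary key, foreign key, NOT NULL, and CHECK constraints.
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    """
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount REAL NOT NULL CHECK (amount >= 0)
    )
    """
)
conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(1, 10.0), (1, 15.5), (2, 7.25)],
)

QUERY = "SELECT id, amount FROM orders WHERE customer_id = ?"


def plan(sql: str, params: tuple) -> str:
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


plan_before = plan(QUERY, (1,))  # no index on customer_id yet
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(QUERY, (1,))   # the planner can now use the index

# A join with aggregation across the relationship.
totals = conn.execute(
    """
    SELECT c.name, SUM(o.amount)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
```

Comparing `plan_before` with `plan_after` shows the planner moving from a full table scan to a lookup through `idx_orders_customer`; the exact wording of the plan text varies between SQLite versions.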

Cloud Concepts and Resources

I have hands-on experience deploying and managing applications on AWS using Amazon ECS Fargate and EC2. My typical workflow involves building Docker images, pushing them to Amazon ECR, and running containerized applications through ECS for scalable, reliable service delivery. I’m also experienced in managing relational databases in the cloud with Amazon RDS for PostgreSQL, including setup, configuration, and basic administration. To support my practical skills, I hold the AWS Certified Cloud Practitioner certification, validating my knowledge of AWS services, cloud concepts, security, and cost optimization.

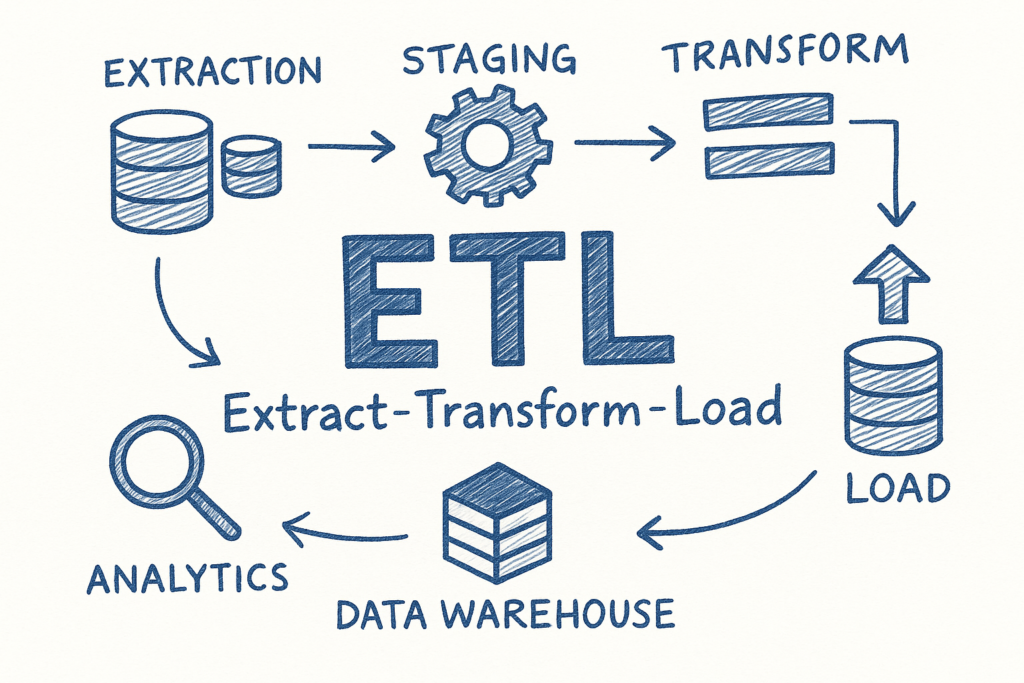

ETL (Extract, Transform, Load) Concepts

My knowledge of data integration covers both Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) methodologies. This includes extracting data from sources such as CSV, JSON, and relational databases; applying cleaning, validation, and formatting steps; and loading it into target systems for storage or analysis. I understand the distinctions between ETL’s transform-before-load approach and ELT’s transform-after-load approach, and how to apply each effectively depending on the system architecture.
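The difference between the two approaches can be sketched with the standard library alone. In the example below, the ETL path cleans rows in Python before loading them, while the ELT path loads the raw rows into a staging table and cleans them with SQL inside the database. SQLite stands in for the target system, and the sample data, table names, and validation rules are hypothetical and deliberately simple.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract: one messy email, one missing email, one bad amount.
RAW_CSV = (
    "id,email,amount\n"
    "1, Ada@Example.com ,10.5\n"
    "2,,3.0\n"
    "3,grace@example.com,abc\n"
    "4,linus@example.com,7\n"
)


def extract(text: str) -> list[dict]:
    """Extract: read the CSV source into a list of raw string records."""
    return list(csv.DictReader(io.StringIO(text)))


def is_number(value: str) -> bool:
    try:
        float(value)
        return True
    except ValueError:
        return False


def etl(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """ETL: transform and validate in Python, then load only clean rows."""
    clean = [
        (int(r["id"]), r["email"].strip().lower(), float(r["amount"]))
        for r in rows
        if r["email"].strip() and is_number(r["amount"])
    ]
    conn.execute("CREATE TABLE sales_etl (id INTEGER, email TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", clean)


def elt(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """ELT: load raw rows into staging, then transform with SQL in the database."""
    conn.execute("CREATE TABLE staging_sales (id TEXT, email TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO staging_sales VALUES (?, ?, ?)",
        [(r["id"], r["email"], r["amount"]) for r in rows],
    )
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT CAST(id AS INTEGER)  AS id,
               LOWER(TRIM(email))   AS email,
               CAST(amount AS REAL) AS amount
        FROM staging_sales
        WHERE TRIM(email) <> ''
          AND amount <> ''
          AND amount NOT GLOB '*[^0-9.]*'  -- keep only digit-and-dot values
        """
    )


conn = sqlite3.connect(":memory:")
raw_rows = extract(RAW_CSV)
etl(conn, raw_rows)
elt(conn, raw_rows)

etl_rows = conn.execute("SELECT id, email, amount FROM sales_etl ORDER BY id").fetchall()
elt_rows = conn.execute("SELECT id, email, amount FROM sales_elt ORDER BY id").fetchall()
```

Both paths end with the same two clean rows. The practical difference is where the work happens: ETL keeps invalid data out of the target entirely, while ELT keeps the raw data available in `staging_sales` and relies on the database engine for the transformation.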

I am skilled in using Python libraries like Pandas for transformation tasks, leveraging SQL for in-database processing, and orchestrating data pipelines using Dagster. Additionally, I am familiar with the Medallion Architecture (Bronze–Silver–Gold layers) to structure data workflows—organizing raw data in the Bronze layer, applying cleansing and enrichment in the Silver layer, and delivering business-ready datasets in the Gold layer for analytics and reporting.
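The three Medallion layers can be illustrated with a minimal, dependency-free sketch. Plain Python lists and dictionaries stand in for the tables a real pipeline would keep in a lake or warehouse, and no Pandas or Dagster is involved. The sample records and the function names `to_silver` and `to_gold` are hypothetical.

```python
from collections import defaultdict

# Bronze: raw records stored exactly as received (hypothetical sample data).
bronze = [
    {"order_id": "1", "country": " us ", "amount": "10.50"},
    {"order_id": "2", "country": "DE", "amount": "n/a"},    # invalid amount
    {"order_id": "3", "country": "us", "amount": "4.50"},
    {"order_id": "3", "country": "us", "amount": "4.50"},   # duplicate delivery
]


def to_silver(rows: list[dict]) -> list[dict]:
    """Silver: cast types, normalize values, drop invalid rows and duplicates."""
    seen_ids = set()
    silver_rows = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        order_id = int(row["order_id"])
        if order_id in seen_ids:
            continue  # skip repeated deliveries of the same order
        seen_ids.add(order_id)
        silver_rows.append(
            {
                "order_id": order_id,
                "country": row["country"].strip().upper(),
                "amount": amount,
            }
        )
    return silver_rows


def to_gold(rows: list[dict]) -> dict[str, float]:
    """Gold: aggregate cleansed rows into a business-ready revenue-per-country view."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

Because the Bronze data is never modified, the Silver and Gold layers can be rebuilt at any time if the cleansing rules or the reporting requirements change.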

I also have experience creating Grafana dashboards to monitor pipeline performance and visualize key metrics, enabling better operational insight and proactive issue resolution.

Networks

I understand networking fundamentals, including how systems exchange information across local and wide area networks. The core concepts I work with are IP addressing, DNS resolution, HTTP/HTTPS communication, and the role of ports in application connectivity. I’m familiar with secure transfer protocols such as SFTP for safe data exchange, and with security measures such as firewalls and encryption that keep applications both accessible and protected in on-premises and cloud-based environments.
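A few of these concepts can be shown with Python's standard library. The sketch below splits a URL into scheme, host, and port, and checks whether an IP address is private or falls inside a given subnet. The hostnames, addresses, and helper names are hypothetical, and an actual DNS lookup (for example via `socket.getaddrinfo`) is left out because it needs network access.

```python
import ipaddress
from urllib.parse import urlsplit

# Well-known default ports for the protocols discussed above.
DEFAULT_PORTS = {"http": 80, "https": 443, "sftp": 22}


def endpoint(url: str) -> tuple[str, str, int]:
    """Return (scheme, hostname, port), falling back to the scheme's default port."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]
    return parts.scheme, parts.hostname, port


def is_private(address: str) -> bool:
    """True for addresses in private ranges such as 10.0.0.0/8."""
    return ipaddress.ip_address(address).is_private


def in_subnet(address: str, cidr: str) -> bool:
    """True if the address belongs to the network given in CIDR notation."""
    return ipaddress.ip_address(address) in ipaddress.ip_network(cidr)


web = endpoint("https://example.com/status")
custom = endpoint("https://example.com:8443/health")
transfer = endpoint("sftp://files.example.com/upload")
```

Checks like `in_subnet("10.0.1.25", "10.0.0.0/16")` mirror the kind of reasoning behind firewall and security-group rules, where access is granted to a port for a specific address range.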

Containerization

I use Docker to package applications with their dependencies into portable, isolated environments. This involves building and configuring custom images, running containers locally or in the cloud, and managing multi-container setups. Containerization keeps application behavior consistent across development, testing, and production. I’m also familiar with integrating Docker into CI/CD pipelines, including GitHub Actions, to automate builds, tests, and deployments for faster and more reliable delivery of containerized applications.